Type: Bug

Resolution: Fixed

Priority: Blocker

Jenkins 1.477.2

Master and Slaves: Windows Server 2008 R2

(Also on Jenkins 1.488 Windows Server 2008)

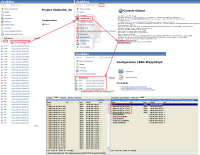

We have recently noticed builds disappearing from the "Build History" listing on the project page. A developer was watching a build, waiting for it to complete, and said it disappeared after it finished. Nothing was noted in any of the logs concerning that build.

The data was still present on disk, and doing a reload from disk brought the build back. We have other automated jobs that deploy these builds based on build number, so this is a pretty big issue in our environment.
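For anyone trying to catch this as it happens, a rough sketch of a check for builds that exist on disk but are missing from the listing might look like the following. It assumes the build numbers on disk have already been collected somehow (the directory layout differs between Jenkins versions, so that part is left out) and that the listing comes from the job's JSON remote API, e.g. `api/json?tree=builds[number]`. The function name and the sample data are hypothetical.

```python
import json


def missing_from_history(disk_build_numbers, api_json_text):
    """Return build numbers that are on disk but absent from the API listing.

    disk_build_numbers: iterable of ints gathered from the job's builds
        directory (how they are gathered is an assumption left to the reader).
    api_json_text: raw JSON text assumed to have the shape
        {"builds": [{"number": 101}, ...]}.
    """
    listed = {b["number"] for b in json.loads(api_json_text).get("builds", [])}
    return sorted(set(disk_build_numbers) - listed)


# Hypothetical sample: build 102 is on disk but not in the listing.
sample_api = '{"builds": [{"number": 103}, {"number": 101}]}'
print(missing_from_history([101, 102, 103], sample_api))
```

A non-empty result right after a build finishes would match the symptom described here, and could be used to trigger an alert before the deploy jobs run.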

We are not able to reproduce it at this point, but I still wanted to document what was happening.

I have seen other JIRA issues that look similar, but in those, jobs were disappearing after a restart or an upgrade. That is not the case for us. The build disappears after completion, whether it succeeds or fails.

- is duplicated by
  - JENKINS-16018 Not all builds show in the build history dashboard on job (Resolved)
  - JENKINS-16735 All builds are gone since I copied an existing project (Resolved)
  - JENKINS-15533 Envinject plugin incompatibility with Jenkins 1.485 (Resolved)
  - JENKINS-15719 Builds and workspace disappear for jobs created after upgrade to 1.487 (Resolved)
  - JENKINS-16117 sporadical unwanted suppression of build artifacts (Resolved)
  - JENKINS-16175 Dashboard not showing job status (Resolved)
  - JENKINS-15594 CopyArtifact plugin cannot always copy artifacts (Closed)
- is related to
  - JENKINS-18678 Builds disappear some time after renaming job (Resolved)
  - JENKINS-21268 No build.xml is created when warnings plugin is used in combination with deactivated maven plugin (Resolved)
  - JENKINS-8754 ROADMAP: Improve Start-up Time (Closed)
  - JENKINS-16845 NullPointer in getPreviousBuild (Resolved)
  - JENKINS-17265 Builds disappearing from history (Resolved)
  - JENKINS-23130 nextBuildNumber keeps being set to previous numbers (Resolved)
  - JENKINS-23152 builds getting lost due to GerritTrigger (Resolved)

I had this problem, but after upgrading to Jenkins 1.486 all was back to normal.