Improvement

Resolution: Unresolved

Blocker

I retrieve the pipeline script from SCM. See attached screenshot for context.

Now it would be helpful to be able to use the value of (at least) "Stream Codeline" inside my pipeline script.

As far as I understand this is not possible so far?

- Stream_Codeline.png (73 kB)

Relates to:

- JENKINS-72481 Expose Stream as environment variable (Open)

- JENKINS-73856 DOC: Document JENKINSFILE_PATH and limitations (Open)

[JENKINS-39107] expose "Stream Codeline" as an environment variable

Using the following Jenkinsfile the global library is used and the correct stream is derived:

    @Library('my-shared-library')
    import org.foo.lib.*

    pipeline {
      agent { label 'Windows10' }
      stages {
        stage("Repro") {
          steps {
            script {
              echo "This library just prints HELLO FROM testFunc version 1."
              def z = new org.foo.lib()
              z.testFunc()
              echo "This shows that the slave contains the correct files with an explicit sync."
              bat 'dir'
              bat 'echo WS IS %P4_CLIENT%'
              echo "This tries to get the stream name"
              def p4 = p4(credential: 'JenkinsMaster', workspace: staticSpec(charset: 'none', name: env.P4_CLIENT))
              def myClient = p4.fetch('client', env.P4_CLIENT)
              println ("Stream is: " + myClient.get("Stream") )
            }
          }
        }
      }
    }

This results in:

    --- cut ---
    P4 Task: syncing files at change: 25
    ... p4 sync -q C:\filestore\Jenkins\workspace\Pipeline/...@25 +
    duration: (139ms)
    P4 Task: saving built changes.
    ... p4 client -o jenkinsTemp-fb4e3cb9-0625-4a97-b83b-f9003f7e4cc4 +
    ... p4 info +
    ... p4 info +
    ... p4 client -i +
    ... done
    [Pipeline] }
    [Pipeline] // stage
    [Pipeline] withEnv
    [Pipeline] {
    [Pipeline] stage
    [Pipeline] { (Repro)
    [Pipeline] script
    [Pipeline] {
    [Pipeline] echo
    This library just prints HELLO FROM testFunc version 1.
    [Pipeline] echo
    HELLO FROM testFunc version 1
    [Pipeline] echo
    This shows that the slave contains the correct files with an explicit sync.
    [Pipeline] bat
    [Pipeline] Running batch script
    C:\filestore\Jenkins\workspace\Pipeline>dir
     Volume in drive C has no label.
     Volume Serial Number is 8EBB-387C
     Directory of C:\filestore\Jenkins\workspace\Pipeline
    05/11/2018 15:07 <DIR> .
    05/11/2018 15:07 <DIR> ..
    05/11/2018 15:07 752 Jenkinsfile
    05/11/2018 15:07 <DIR> libs
    05/11/2018 15:07 14 test.c
    05/11/2018 15:07 14 test.h
    05/11/2018 15:07 14 test1.c
    05/11/2018 15:07 14 test1.h
    5 File(s) 808 bytes
    3 Dir(s) 82,407,059,456 bytes free
    [Pipeline] bat
    [Pipeline] Running batch script
    C:\filestore\Jenkins\workspace\Pipeline>echo WS IS jenkinsTemp-fb4e3cb9-0625-4a97-b83b-f9003f7e4cc4
    WS IS jenkinsTemp-fb4e3cb9-0625-4a97-b83b-f9003f7e4cc4
    [Pipeline] echo
    This tries to get the stream name
    [Pipeline] p4
    [Pipeline] echo
    Stream is: //streams/main
    [Pipeline] }
    [Pipeline] // script
    [Pipeline] }
    [Pipeline] // stage
    [Pipeline] }
    [Pipeline] // withEnv
    [Pipeline] }
    [Pipeline] // node
    [Pipeline] End of Pipeline
    Finished: SUCCESS

I have tried with the "node" element and this does not work. No files are synced!

    @Library('my-shared-library')
    import org.foo.lib.*

    pipeline {
      node{ 'Windows10' }
      stage("Repro") {
        echo "This library just prints HELLO FROM testFunc version 1."
        def z = new org.foo.lib()
        z.testFunc()
        echo "This shows that the slave contains the correct files with an explicit sync."
        bat 'dir'
        bat 'echo WS IS %P4_CLIENT%'
        echo "This tries to get the stream name"
        def p4 = p4(credential: 'JenkinsMaster', workspace: staticSpec(charset: 'none', name: env.P4_CLIENT))
        def myClient = p4.fetch('client', env.P4_CLIENT)
        println ("Stream is: " + myClient.get("Stream") )
      }
    }

Confirmation that the workaround is not valid when using scripted pipeline. Scripted pipeline does not do the implicit sync, so P4_CLIENT is either null or, if global libraries are used, the temporary client for the global library.

However, as P4_CLIENT is not set, it is questionable whether 'P4_STREAM' (if it existed) would be available before 'p4sync' is invoked, which itself would need to know the stream name to run.

Might be able to store the Perforce Depot path to the Jenkinsfile. (Need to consider lightweight checkout where no client is used)

Exposed jenkinsfile path as env variable irrespective of streams

This feature does not work as expected.

If you have a global library import, the variable "JENKINSFILE_PATH" contains the path to the global library + "/Jenkinsfile".

Tried different ways with a multibranch pipeline using global library and Jenkinsfile. Here are the findings:

If the Jenkinsfile is doing a skipDefaultCheckout, the JENKINSFILE_PATH is not set. This is observed irrespective of whether the pipeline uses global library or not.

If the pipeline uses global library without skipDefaultCheckout, the JENKINSFILE_PATH environment variable is set correctly.

Update: This feature is still being asked for and needed.

Example:

Need to make build decisions based on the purpose of the stream, which is stored in the stream name.

This one is also quite old and still really missing: could you recheck the priorities?

Hi heiko_nardmann - You commenting on it should highlight it to the developers but I will send them a direct message also.

Hi heiko_nardmann I am the PO for the P4 plugin. I have tested a simple pipeline and the 'JENKINSFILE_PATH' variable contains the Jenkinsfile path when lightweight checkout is not enabled and there is no skipping of default checkout. Can you explain your use case and what are these settings for it?

First ... probably you already have talked with Karl?

Then ... I use lightweight checkout. And I use a library which seems to complicate things based on the previous comments inside this issue.

Currently I define inside the Jenkinsfile which stream my pipeline should use. Typically this is the same stream that the Jenkinsfile originates from, so having this additionally inside the Jenkinsfile is redundant. It also means that after cloning a set of jobs for a new branch I need to adjust the affected Jenkinsfiles, which is work to be avoided and which one also has to remember to do.

I have to admit that I didn't test this for a while - as no one has stated an improvement has happened and also as I didn't see anything inside the release notes pointing me in this direction.

Please also see case 00776130.

Hi heiko_nardmann thanks for sharing the case, it sheds a lot of light on what is happening. The 'JENKINSFILE_PATH' is only populated correctly under a very minute subset of the use cases. We will have to figure out a way of exposing this regardless of lightweight checkouts, library imports, etc.

I will get back to you on the timeline for this once I meet with my engineering team and define priorities, but this would be considered as part of next feature release. Will reach out once we are able to test this. Would be great if you can lend us your scenario for testing this. Thanks a lot

Based on Karl's comment (dated 2018-11-05 16:10) it looks like support already has something to use for testing the problem.

skarwa - The reproduction example mentioned above comes from Zoom meetings with heiko_nardmann. It's been a long time since I tested this but if there is not enough information above just let me know.

Hi skarwa ,

I assume that during the last two months you set up priorities as stated in your last comment here?

Can we expect this to be fixed - or at least improved - in the next release? Or what are your plans now?

Hi heiko_nardmann, yes, this is on the list to be fixed. Once we are out with the release of JENKINS-65246 we will take this up. The focus of the analysis will be on importing global libraries and why that messes up JENKINSFILE_PATH and, if possible, on also populating this variable with lightweight checkout. Thanks for following up!

Fixed in release 1.14.4. When skipDefaultCheckout and lightweight checkout are selected, the Jenkinsfile path will be available in JENKINSFILE_PATH, assuming the Jenkinsfile is located in the root directory of the project.

That's a nice present right after Diwali and right before Xmas ...

I'll update to 1.14.4 this weekend.

heiko_nardmann I just updated to 1.14.4 but still cannot find any environment variable to get the stream codeline. How can I access the streamAtChange in my Jenkins pipeline script? I found no description in https://github.com/jenkinsci/p4-plugin/blob/master/docs/P4GROOVY.md

I have to admit that after updating the plugin at the beginning of December I have not yet found the time to look into this further. But since I dump the environment as part of my pipeline code, I see

So based on the path of the Jenkinsfile you can derive the stream.
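As a rough illustration of deriving the stream from the Jenkinsfile path, here is a minimal Groovy sketch. The helper name `streamFromDepotPath` and its `streamDepth` parameter are hypothetical and not part of the P4 plugin; the sketch assumes the stream name is made of the depot plus `streamDepth` further path components (one by default).

```groovy
// Hypothetical helper, not part of the P4 plugin: derive "//depot/stream"
// from a depot path such as the value of JENKINSFILE_PATH.
// Assumption: the stream consists of the depot name plus `streamDepth` components.
String streamFromDepotPath(String depotPath, int streamDepth = 1) {
    if (depotPath == null || !depotPath.startsWith('//')) {
        return null
    }
    // Drop the leading "//" and split the rest into path components.
    List<String> parts = depotPath.substring(2).tokenize('/')
    // Need the depot, the stream components and at least one file component.
    if (parts.size() < streamDepth + 2) {
        return null
    }
    return '//' + parts.take(streamDepth + 1).join('/')
}

println streamFromDepotPath('//streams/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107')
```

Note that this only reflects where the Jenkinsfile is stored. As described elsewhere in this issue, the result can be misleading when a global library has been loaded first or when the job runs in a virtual stream.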

But you are right: having some STREAM_AT_CHANGE inside the environment would be far clearer. And technically probably also better, as no revision of the stream spec is given inside JENKINSFILE_PATH. It's just that this hasn't been part of this ticket.

Please raise a new one - I'll definitely vote for it ...

skumar7322 : there is still a problem: right at the beginning the value inside env.JENKINSFILE_PATH is wrong. E.g. I see

//navigation/jenkins/build_env/Jenkins/src/shared/CEMsg/testframe/CEMsg.Jenkinsfile.AITI-357

and I expect

//navigation/navapps/src/shared/CEMsg/testframe/CEMsg.Jenkinsfile.AITI-357

The path "//navigation/jenkins/build_env/Jenkins" is the one pointing to my pipeline library (e.g. containing the "vars" folder).

The prefix "//navigation/jenkins" is the corresponding path of the stream. See

The path "//navigation/navapps" contains the project source code.

Please analyze again.

Maybe the method task() from class WhereTask needs to be used somehow?

Hi heiko_nardmann ,

I tried to reproduce the issue as follows:

External Library Path: //depot/JenkinsFilePath/lib

Stream Name: JenkinsFilePath

Stream path: //stream_depot/JenkinsFilePath

Jenkinsfile path: //stream_depot/JenkinsFilePath/Jenkinsfile

The checkout step in the script uses this stream to get the source code and populate the JENKINSFILE_PATH variable with the expected path of the Jenkinsfile.

Could you please share the path that is used to populate the workspace in the checkout step?

Did you take my communication as part of the case into account already?

Not sure whether this is stored as part of case 01032818 or the older 00776130; maybe you ask Karl ... ?

The last time that I've checked the tests for the plugin I didn't find any corr. test case using a library (esp. looking at WorkflowTest.java, EnvVariableTest.java, JenkinsfileTest.java) and then checking JENKINSFILE_PATH before the actual pipeline. Did I miss something? Otherwise this definitely needs to be done as a first step.

Thank you heiko_nardmann for your time on the meeting. With your help I was finally able to reproduce this.

Reproduction steps:

(1) Create streams depot called 'library' and stream called '//library/main'.

(2) Add following file as '//library/main/src/org/foo/lib.groovy':

    package org.foo;

    def dispEnv () {
      echo "All Environment Variables"
      if (isUnix()) {
        sh 'env'
      } else {
        bat 'set'
      }
    }

    return this;

(3) Create a stream depot for the source code called 'streams' and a stream called '//streams/main'.

(4) Add the following Jenkinsfile as '//streams/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107'.

    @Library('stream-lib')
    import org.foo.lib.*

    echo "Outside pipeline in Jenkinsfile:"
    echo "Variable is:"
    echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"

    pipeline {
      agent any
      stages {
        stage("Repro") {
          steps {
            script {
              echo "Inside pipeline in Jenkinsfile:"
              echo "Variable is:"
              echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"
            }
          }
        }
      }
    }

(5) Create a Legacy SCM library called 'stream-lib' that uses the manual workspace spec '//library/main/... //${P4_CLIENT}/...'.

(6) Create a pipeline job that uses the manual workspace spec '//streams/main/... //${P4_CLIENT}/...' and 'Script path' of 'Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107'.

(7) Run the job. It shows:

    [Pipeline] Start of Pipeline
    [Pipeline] echo
    Outside pipeline in Jenkinsfile:
    [Pipeline] echo
    Variable is:
    [Pipeline] echo
    env.JENKINSFILE_PATH=//library/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107

This is incorrect: the Jenkinsfile is under //streams/main. The output of the block inside 'pipeline' is correct:

    [Pipeline] echo
    Inside pipeline in Jenkinsfile:
    [Pipeline] echo
    Variable is:
    [Pipeline] echo
    env.JENKINSFILE_PATH=//streams/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107

Screenshots:

Library definition

Job definition

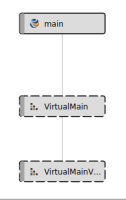

The JENKINSFILE_PATH variable solves most of this problem but does not solve the problem for Virtual streams.

With a Virtual stream the file does not exist in the stream itself; it exists in its parent. For example:

Run 'p4 files' to list the files in each stream:

    $ p4 files //streams/main/...Jenkinsfile
    //streams/main/Jenkinsfiles/Jenkinsfile#3 - edit change 330 (text)
    $ p4 files //streams/VirtualMain/...Jenkinsfile
    //streams/VirtualMain/...Jenkinsfile - no such file(s).
    $ p4 files //streams/VirtualMainVirtualChild/...Jenkinsfile
    //streams/VirtualMainVirtualChild/...Jenkinsfile - no such file(s).

but when you use a workspace attached to the Virtual stream you get the files (from the parent):

    $ p4 switch //streams/VirtualMainVirtualChild
    $ p4 files ...
    //streams/main/Jenkinsfiles/Jenkinsfile#3 - edit change 330 (text)

Reproduction steps:

(1) Create streams depot called 'library' and stream called '//library/main'.

(2) Add following file as '//library/main/src/org/foo/lib.groovy':

    package org.foo;

    def dispEnv () {
      echo "All Environment Variables"
      if (isUnix()) {
        sh 'env'
      } else {
        bat 'set'
      }
    }

    return this;

(3) Create a stream depot for the source code called 'streams' and a stream called '//streams/main'.

(4) Add the following Jenkinsfile as '//streams/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107'.

    @Library('stream-lib')
    import org.foo.lib.*

    echo "Outside pipeline in Jenkinsfile:"
    echo "Variable is:"
    echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"

    pipeline {
      agent any
      stages {
        stage("Repro") {
          steps {
            script {
              echo "Inside pipeline in Jenkinsfile:"
              echo "Variable is:"
              echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"
            }
          }
        }
      }
    }

(5) Create a child stream from //streams/main of type 'Virtual' called '//streams/virtual'

(6) Create a Legacy SCM library called 'stream-lib' that uses the manual workspace spec '//library/main/... //${P4_CLIENT}/...'.

(7) Create a pipeline job that uses the manual workspace spec '//streams/virtual/... //${P4_CLIENT}/...' and 'Script path' of 'Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107'.

(8) Run the job. It shows the file is in 'main':

    [Pipeline] Start of Pipeline
    [Pipeline] echo
    Outside pipeline in Jenkinsfile:
    [Pipeline] echo
    Variable is:
    [Pipeline] echo
    env.JENKINSFILE_PATH=//streams/main/Jenkinsfiles/Jenkinsfile-Repro-JENKINS-39107

This is technically correct but does not highlight that the job ran in the Virtual stream called //streams/virtual.

Hi,

I tried today with version 1.16.0 with a multibranch project and there is no JENKINSFILE_PATH variable. Edit: The JENKINSFILE_PATH environment variable was there AFTER the call of checkout scm in the script. Nevertheless, it would be very nice if there were simply an environment variable containing the StreamAtChange, not the full Jenkinsfile path.
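The ordering described in the edit above can be checked with a small scripted pipeline. This is only a sketch: it assumes a multibranch job in which `scm` is defined by Jenkins, and it makes no claim about what the first echo prints beyond the observation reported here.

```groovy
node {
    // Per the observation above, the variable is not set yet at this point.
    echo "before checkout: JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"

    // 'scm' is provided by the multibranch job configuration.
    checkout scm

    // After the checkout the variable was reported to be present.
    echo "after checkout: JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"
}
```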

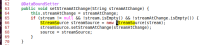

The developer feedback has led me to do my own analysis of the source code. I'm no Java expert - more C++ and Python.

Wrt. virtual streams ... when specifying a virtual stream inside a job configuration like

how is that one resolved to the "physical" stream? Or rather, where is this information about the virtual stream lost?

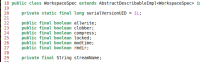

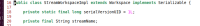

From looking at the source code I see that there is a class StreamSource with a method getStream():

Isn't that one used during the whole process? At least that is my impression based on the corr. jelly file:

So if the class StreamSource contains the original value ... it is only used in P4Step:

Then the method getWorkspace() from StreamSource seems to be used to create a workspace based on this stream:

So this instance of StreamWorkspaceImpl seems to still contain the original name of the virtual stream:

I also see that inside class WorkspaceSpec there is a member streamName:

danielh I agree with you that beside JENKINSFILE_PATH some STREAM_AT_CHANGE would be even more helpful.

Hi heiko_nardmann

In P4Plugin, the stream is used in the following scenarios:

1. While defining the Workspace behaviour as Manual or Streams.

a) If you are using Manual Workspace Behaviour with a stream:

- Jenkins will pass the value of the field "Stream" from the UI to the streamName field of the class WorkspaceSpec.

- This stream name is used to create a Perforce client by ManualWorkspaceImpl.setClient(...).

- Refer to the config file: src/main/resources/org/jenkinsci/plugins/p4/workspace/WorkspaceSpec/config.jelly

b) If you are using Streams Workspace Behaviour:

- Jenkins will pass the value of the "Stream Codeline" field to the streamName field of the class StreamWorkspaceImpl.

- This stream name is then used to create a Perforce client by StreamWorkspaceImpl.setClient(...).

- Refer to the config file: src/main/resources/org/jenkinsci/plugins/p4/workspace/StreamWorkspaceImpl/config.jelly

2. Inside the P4Sync step.

- This is used for pipeline jobs and is configured from the "pipeline syntax".

- In the P4Sync config you can select "Stream Codeline" while defining the workspace source. There is a UI element "Stream".

- Jenkins will pass the value of this UI element to the stream field of the StreamSource.java class.

- This is used to generate the Groovy code which can then be used in the pipeline script.

In the screenshot shared, Manual Workspace Behaviour is used. This will be sent to the server using step 1.a (explained above).

In my view, if we can get the Jenkinsfile for the virtual stream by creating a workspace using the p4 command line client, then it should work in the P4 plugin as well.

As the "STREAM_AT_CHANGE" variable is useful, it will be available in the next release.

By default, I just want to use the same stream for later syncing the source code as the one that the Jenkinsfile originates from.

In my case I even analyze the stream spec to avoid syncing binary assets which are not relevant for the current target platform. So yes, I create a manual workspace like

    ws = [$class: 'ManualWorkspaceImpl',
          charset: 'none',
          cleanup: false,
          name: clientSpecName,
          pinHost: false,
          spec: clientSpec(
            allwrite: false,
            backup: false,
            changeView: changeView,
            clobber: true,
            compress: false,
            line: 'LOCAL',
            locked: false,
            modtime: false,
            rmdir: true,
            serverID: '',
            streamName: streamNameAttribute,
            type: workspaceType,
            view: manualView)]

    def scm = perforce(
      browser: swarm('<some Helix Swarm url>'),
      credential: p4CredentialId,
      filter: [latestWithPin(true)],
      populate: forceClean(
        have: true,
        parallel: [enable: true, threads: '4'],
        pin: changelistToBuildWith,
        quiet: true
      ),
      workspace: ws
    )

    scmVars = checkout changelog: true, scm: scm

in my pipeline code. So far I need to explicitly define the streamName inside my Jenkinsfiles which is in most cases redundant.

Today I updated the P4 plugin to the new 1.17.0.

Bad luck ... I don't see any P4_STREAM_AT_CHANGE environment variable. My code inside Jenkinsfile looks like

    @Library('Nav-CI-Standard') _

    echo "Outside pipeline in Jenkinsfile:"
    echo "Variable is:"
    echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"
    echo "env=${env.getEnvironment()}"

    import com.stryker.BuildConfiguration

    ... some additional code ...

As part of the third echo I expected to see some P4_STREAM_AT_CHANGE environment variable. Missing ...

What worries me even more: after doing a git pull and then

grep -rw P4_STREAM_AT_CHANGE

I don't see any reference to P4_STREAM_AT_CHANGE besides the implementation inside ./src/main/java/org/jenkinsci/plugins/p4/build/P4StreamEnvironmentContributionAction.java? And of course inside the RELEASE.md ...

But ... where do I find the corr. unit test?

P4_STREAM_AT_CHANGE is available inside the pipeline block, like other variables such as P4_PORT. This is because these variables are populated only after the checkout and sync step. To access these variables for further use:

    pipeline {
      agent any
      stages {
        stage("Repro") {
          steps {
            script {
              echo "Inside pipeline in Jenkinsfile:"
              echo "Variable is:"
              echo "env.JENKINSFILE_PATH=${env.JENKINSFILE_PATH}"
            }
          }
        }
      }
    }
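For the stream variable itself, a declarative sketch along the same lines might look as follows. It assumes the default checkout is not skipped, so that the plugin has already populated P4_STREAM_AT_CHANGE when the stage runs; the stage name is arbitrary and the exact format of the value is not stated in this issue.

```groovy
pipeline {
    agent any
    stages {
        stage('Show stream') {
            steps {
                // Read after the implicit checkout and sync have run;
                // before that point the variable is expected to be unset.
                echo "env.P4_STREAM_AT_CHANGE=${env.P4_STREAM_AT_CHANGE}"
            }
        }
    }
}
```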

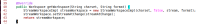

To see how P4_STREAM_AT_CHANGE is updated, please refer to the following:

Line 584 in PerforceSCM.java sets this variable. Please look at this method of PerforceSCM.java:

private void setStreamEnvVariables(Run<?, ?> run, Workspace ws)

This function was tested with our system test suite. We can add a unit test case from our test suite in the next release.

Pity ... I had expected that the checkout of the library would set this now as well.

Can I maybe perform some kind of dummy checkout to reach my goal? As a workaround until this problem is really solved?

Okay, probably not a good idea ... this might confuse Jenkins wrt. determining changes even more.

Yes, please add the corr. unit tests soon. System tests typically miss a number of corner cases.

Hi patdhaval30 ,

based on what Karl has reproduced - see https://issues.jenkins.io/browse/JENKINS-39107?focusedId=446317&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-446317 - I'm not sure whether I would call this problem fixed now.

It might be that things have been improved in a number of use cases; but for my use case, which was the reason for creating this issue, the problem still exists.

From time to time I also wonder whether I should try to fix this on my own but for that the missing unit tests need to be provided so that coverage is improved when doing local tests. Which is another reason why the ticket should not be considered to be fixed.

Okay, maybe the philosophy among developers varies here: at least in our company's teams a feature is only considered implemented if unit tests are available and the corresponding code coverage is sufficient. Automated system tests are of course a different thing ...

Do you agree with me that we should reopen this ticket?

Hi heiko_nardmann ,

Agreed, we have internally created a new ticket to add test cases. We will close this issue when test cases are added.

Thanks.

Could someone review the following code? It seems to work fine for me:

    import hudson.scm.SCM  // type returned by definition.getScm()
    import org.jenkinsci.plugins.workflow.job.WorkflowJob
    import org.jenkinsci.plugins.workflow.cps.CpsScmFlowDefinition
    import org.jenkinsci.plugins.p4.PerforceScm
    import org.jenkinsci.plugins.p4.workspace.StreamWorkspaceImpl
    import org.jenkinsci.plugins.p4.workspace.ManualWorkspaceImpl

    /**
     * @brief for the current build get the stream path that is configured to access the Jenkinsfile
     *        inside the job
     *
     * @return stream path or null if it could not be determined
     */
    String getStreamForJenkinsfile() {
      def rawBuildObj = currentBuild.getRawBuild()
      def currentJobObj = rawBuildObj.project
      if ( !currentJobObj ) {
        echo "ERROR: no job object found!"
        return null
      }
      if ( !(currentJobObj instanceof WorkflowJob) ) {
        echo "ERROR: job is not an instance of WorkflowJob!"
        return null
      }

      // Only jobs that load their Jenkinsfile from SCM carry a workspace definition.
      def definition = currentJobObj.getDefinition()
      if ( !(definition instanceof CpsScmFlowDefinition) ) {
        return null
      }
      SCM scm = definition.getScm()
      if ( !(scm instanceof org.jenkinsci.plugins.p4.PerforceScm) ) {
        return null
      }

      def workspace = scm.getWorkspace()
      if ( !(workspace instanceof StreamWorkspaceImpl) &&
           !(workspace instanceof ManualWorkspaceImpl) ) {
        return null
      }

      // The stream name is stored differently for stream and manual workspaces.
      def streamName
      if ( workspace instanceof StreamWorkspaceImpl ) {
        streamName = workspace.getStreamName()
      } else if ( workspace instanceof ManualWorkspaceImpl ) {
        streamName = workspace.getSpec().getStreamName()
      } else {
        return null
      }
      return streamName
    } // end of getStreamForJenkinsfile()

Based on what I've provided in my previous post there might be a better/cleaner way to get hands on the stream name for the Jenkinsfile. But for now this workaround seems to be okay.

Hi,

heiko_nardmann While STREAM_AT_CHANGE can be accessed via a variable, the code you have written is a good way to access the stream name.

Could you consider adding corr. code to the plugin so that my request as defined by this ticket finally gets resolved?

heiko_nardmann Would you like to see the name of the stream in which the Jenkinsfile is located in a variable?

If there are multiple Jenkinsfiles, is the expectation that the variable shows the stream name of the latest Jenkinsfile, overwriting the stream name of the earlier file?

Multiple Jenkinsfiles? How is this possible with a pipeline job? Could you provide an example? Maybe a screenshot of job configuration page?

So far I thought that one can only specify a single Jenkinsfile. How would the pipeline know otherwise which one to use?

I've to admit we have never used this. But from https://help.perforce.com/helix-core/integrations-plugins/p4jenkins/current/Content/P4Jenkins/multibranch-pipeline-setup.html ...

Jenkins will now probe each child stream associated with the Include streams path //streams/... (effectively all the streams returned by the command p4 streams //streams/...), if the probe finds a Jenkinsfile in the stream then it creates a Pipeline Job and schedules a build.

This sounds like each build has its own single Jenkinsfile?

To my understanding every build can only be based on a single Jenkinsfile.

And looking at this multi branch thing: this seems to be a perfect situation where one wants to know which stream one operates on in a single build.

In this situation, would you expect the variable to contain the name of the latest stream from which a Jenkinsfile is run?

Hmmm ... your answer tells me that we don't have a common understanding of the situation. Probably me because I haven't used multi branch so far. I try to depict my understanding based on the documentation:

- given is a multi branch job specified to look for streams

- let us assume that there are e.g. three streams //streams/A, //streams/B, and //streams/C

- each stream has its own Jenkinsfile

  - //streams/A/Jenkinsfile

  - //streams/B/Jenkinsfile

  - //streams/C/Jenkinsfile

Now from structure to behaviour:

- as part of the multi branch job Jenkins probes for streams and finds the streams given above

- three pipeline jobs are created:

  - for //streams/A based on //streams/A/Jenkinsfile

  - for //streams/B based on //streams/B/Jenkinsfile

  - for //streams/C based on //streams/C/Jenkinsfile

- based on these jobs three builds are triggered

  - a build A based on //streams/A/Jenkinsfile

  - a build B based on //streams/B/Jenkinsfile

  - a build C based on //streams/C/Jenkinsfile

Now my assumption is that each of these builds only knows about its own Jenkinsfile. So ... with these builds running in parallel gives us

- build A returns //streams/A/Jenkinsfile when echoing environment variable JENKINSFILE_PATH

- build B returns //streams/B/Jenkinsfile when echoing environment variable JENKINSFILE_PATH

- build C returns //streams/C/Jenkinsfile when echoing environment variable JENKINSFILE_PATH

Same for some variable P4_STREAM (which needs to be introduced):

- build A returns //streams/A when echoing environment variable P4_STREAM

- build B returns //streams/B when echoing environment variable P4_STREAM

- build C returns //streams/C when echoing environment variable P4_STREAM

In this scenario there is nothing which I would call a "latest stream".

Could you please check where my understanding is wrong?

Okay, maybe ... my example code uses the configuration of a non multi branch job. I see that there might be some race condition if a user changes configuration right after a build has been triggered. I ignore this race condition as this case is far too rare for me. Maybe I have another look at APIs to see whether I can access the necessary information in a more dynamic way - via build object.

And yes, a multi branch job always dynamically determines all of this. So accessing the (static) configuration does not make sense at all. This also needs to be handled dynamically.

Or does

Jenkins will now probe each child stream associated with the Include streams path //streams/... (effectively all the streams returned by the command p4 streams //streams/...), if the probe finds a Jenkinsfile in the stream then it creates a Pipeline Job and schedules a build.

mean that really a new job is created persistently? With its own configuration? As already stated ... no experience with multi branch setups.

Multi branch seems to make sense if you have a single component built by multiple branches/streams. In our case, with a quite large application consisting of many components, we have a lot of Jenkinsfiles inside a single branch/stream which all need to be built. So ...

- multi branch: component to branch: 1:n

- my scenario: component to branch: n:1

So whenever we branch from mainline/trunk we also "branch" the corresponding Jenkins pipeline jobs.

Maybe the reason for this different approach is that we host multiple large applications with shared components inside a large mono repo/mainline?

heiko_nardmann Apologies for the confusion. Your understanding is correct. We will add it in P4 plugin 1.17.2.

Reports that this does not work when libraries have been loaded first.

e.g.

import GLOBAL Lib ... p4sync

Need to confirm with a test case.