- Bug
- Resolution: Fixed
- Minor
- None

Jenkins 2.111, workflow-durable-task-step 2.19, kubernetes-1.6.0

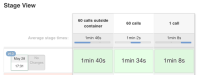

We recently updated our Jenkins installation from 2.101 to 2.111, including every Pipeline-related plugin.

Since this update, every shell «sh» invocation is much slower than before. A shell invocation used to take a few milliseconds; it now takes seconds.

As a result, jobs that used to take 1:30 minutes now take up to 25:00 minutes.

We are trying to figure out which plugin is responsible.
