Bug

Resolution: Duplicate

Major

None

Jenkins 1.50.3, kubernetes plugin 1.14.8

When communication between the JNLP slave and the Jenkins master fails, Pods are left in an Error state and in some cases require manual intervention to clean up. Non-JNLP containers are left running and keep consuming resources in Kubernetes.

Expected behavior would be to:

- Fail the job if a suitable Pod can't be started to run the build

- Make sure that any unsuccessful Pods are cleaned up by the kubernetes plugin

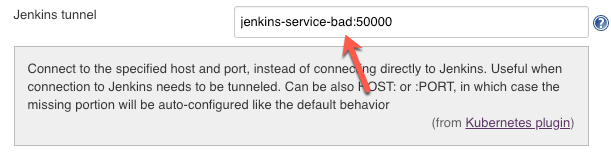

One way to reproduce this issue is by changing the Jenkins tunnel value to something invalid.

The issue also occurs when the Jenkins tunnel value is correct.
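For the first reproduction path (an invalid tunnel value), a quick pre-flight check can show whether the configured value even resolves. The following is a minimal sketch, not part of the kubernetes plugin: `parse_tunnel` and `tunnel_resolves` are hypothetical helper names, and the sketch assumes the tunnel is always written as `HOST:PORT`, as in the `JENKINS_TUNNEL` values shown in this report.

```python
import socket


def parse_tunnel(tunnel):
    """Split a HOST:PORT tunnel value (assumed format) into (host, port)."""
    host, sep, port = tunnel.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError("expected HOST:PORT, got %r" % tunnel)
    return host, int(port)


def tunnel_resolves(tunnel, resolver=socket.getaddrinfo):
    """Return True if the tunnel host name can be resolved.

    The resolver is injectable so the check can be exercised without DNS.
    """
    host, port = parse_tunnel(tunnel)
    try:
        resolver(host, port)
    except socket.gaierror:
        return False
    return True


if __name__ == "__main__":
    # Value taken from the JENKINS_TUNNEL variable in this report.
    print(tunnel_resolves("jenkins-service-bad:50000"))
```

A check like this only covers the name-resolution failure. It says nothing about the second case in this report, where the tunnel is correct and the master refuses the agent.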

When a job is run, the Console shows that it is waiting for a node:

Started by user Admin

Running in Durability level: MAX_SURVIVABILITY

[Pipeline] Start of Pipeline

[Pipeline] podTemplate

[Pipeline] {

[Pipeline] node

Still waiting to schedule task

‘Jenkins’ doesn’t have label ‘mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a’

Meanwhile, in Kubernetes, many Pods are created, and each one is left in an Error state:

~ # kubectl get pod -o wide --watch

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

jenkins-deployment-67c96975d-khpvb 2/2 Running 0 3d4h 10.233.66.66 high-memory-node-5 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-72h55 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-72h55 0/2 Pending 0 0s <none> high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-72h55 0/2 ContainerCreating 0 0s <none> high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-72h55 2/2 Running 0 4s 10.233.98.99 high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-72h55 1/2 Error 0 5s 10.233.98.99 high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-0gf7v 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-0gf7v 0/2 Pending 0 0s <none> high-memory-node-2 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-0gf7v 0/2 ContainerCreating 0 1s <none> high-memory-node-2 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-0gf7v 2/2 Running 0 3s 10.233.119.99 high-memory-node-2 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-0gf7v 1/2 Error 0 4s 10.233.119.99 high-memory-node-2 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-6ggw6 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-6ggw6 0/2 Pending 0 0s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-6ggw6 0/2 ContainerCreating 0 1s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-6ggw6 2/2 Running 0 4s 10.233.72.223 high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-6ggw6 1/2 Error 0 5s 10.233.72.223 high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-c7dxb 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-c7dxb 0/2 Pending 0 0s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-c7dxb 0/2 ContainerCreating 0 1s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-c7dxb 2/2 Running 0 3s 10.233.72.224 high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-c7dxb 1/2 Error 0 5s 10.233.72.224 high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-v0tqq 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-v0tqq 0/2 Pending 0 0s <none> high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-v0tqq 0/2 ContainerCreating 0 0s <none> high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-v0tqq 2/2 Running 0 2s 10.233.78.100 high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-v0tqq 1/2 Error 0 4s 10.233.78.100 high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-pntgv 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-pntgv 0/2 Pending 0 0s <none> high-memory-node-1 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-pntgv 0/2 ContainerCreating 0 1s <none> high-memory-node-1 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-pntgv 2/2 Running 0 2s 10.233.95.100 high-memory-node-1 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-pntgv 1/2 Error 0 4s 10.233.95.100 high-memory-node-1 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-5ncq0 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-5ncq0 0/2 Pending 0 1s <none> high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-5ncq0 0/2 ContainerCreating 0 1s <none> high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-5ncq0 2/2 Running 0 4s 10.233.98.100 high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-5ncq0 1/2 Error 0 5s 10.233.98.100 high-memory-node-3 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-dnssr 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-dnssr 0/2 Pending 0 0s <none> high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-dnssr 0/2 ContainerCreating 0 0s <none> high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-dnssr 2/2 Running 0 3s 10.233.78.101 high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-dnssr 1/2 Error 0 4s 10.233.78.101 high-memory-node-4 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-2tcb1 0/2 Pending 0 0s <none> <none> <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-2tcb1 0/2 Pending 0 0s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-2tcb1 0/2 ContainerCreating 0 1s <none> high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-2tcb1 2/2 Running 0 2s 10.233.72.225 high-memory-node-0 <none> <none>

mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-2tcb1 1/2 Error 0 4s 10.233.72.225 high-memory-node-0 <none> <none>
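The pattern in the watch output (a new Pod every few seconds, each ending in Error) is easier to see when the rows are reduced to the last status seen per Pod. The following is a rough sketch of that reduction. The function names are hypothetical, the rows are copied from the output above, and it assumes the input contains only table rows, with NAME in the first whitespace-separated field and STATUS in the third.

```python
def final_status_by_pod(rows):
    """Map each pod name to the last STATUS value seen in the watch rows."""
    last = {}
    for row in rows:
        fields = row.split()
        if len(fields) < 3 or fields[0] == "NAME":
            continue  # skip blank lines and the header row
        last[fields[0]] = fields[2]
    return last


def pods_ending_in(rows, status):
    """Names of pods whose last observed status equals `status`."""
    return [name for name, s in final_status_by_pod(rows).items() if s == status]


PREFIX = "mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-"
SAMPLE_ROWS = [
    "NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES",
    "jenkins-deployment-67c96975d-khpvb 2/2 Running 0 3d4h 10.233.66.66 high-memory-node-5 <none> <none>",
    PREFIX + "72h55 0/2 Pending 0 0s <none> <none> <none> <none>",
    PREFIX + "72h55 2/2 Running 0 4s 10.233.98.99 high-memory-node-3 <none> <none>",
    PREFIX + "72h55 1/2 Error 0 5s 10.233.98.99 high-memory-node-3 <none> <none>",
    PREFIX + "0gf7v 0/2 Pending 0 0s <none> <none> <none> <none>",
    PREFIX + "0gf7v 1/2 Error 0 4s 10.233.119.99 high-memory-node-2 <none> <none>",
]

if __name__ == "__main__":
    for name in pods_ending_in(SAMPLE_ROWS, "Error"):
        print(name)
```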

Describing one of the Pods shows the following:

~ # kubectl describe pod mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

Name: mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

Namespace: jenkins-master-test

Priority: 0

PriorityClassName: <none>

Node: high-memory-node-2/10.0.40.14

Start Time: Mon, 04 Mar 2019 21:35:11 +0000

Labels: jenkins=slave

jenkins/mypod-e549650e-86ea-43b1-946e-95f307b255b9=true

Annotations: buildUrl: http://jenkins-service:8080/job/testmaxconn/147/

kubernetes.io/limit-ranger:

LimitRanger plugin set: cpu, memory request for container alpine; cpu, memory limit for container alpine; cpu, memory request for containe...

Status: Running

IP: 10.233.119.104

Containers:

alpine:

Container ID: docker://80c1d2d396f1ece3c2c78c2335d78e482cca1899ff2f29047cc4d06892215d04

Image: alpine

Image ID: docker-pullable://alpine@sha256:b3dbf31b77fd99d9c08f780ce6f5282aba076d70a513a8be859d8d3a4d0c92b8

Port: <none>

Host Port: <none>

Command:

cat

State: Running

Started: Mon, 04 Mar 2019 21:35:13 +0000

Ready: True

Restart Count: 0

Limits:

cpu: 2

memory: 1Gi

Requests:

cpu: 25m

memory: 256Mi

Environment:

JENKINS_SECRET: 8e1c8742aa520eebf3ccff2bbf4855c270dbfbe08fea032b8e81dfd921ff9afa

JENKINS_TUNNEL: jenkins-service-bad:50000

JENKINS_AGENT_NAME: mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

JENKINS_NAME: mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

JENKINS_URL: http://jenkins-service:8080/

HOME: /home/jenkins

Mounts:

/home/jenkins from workspace-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-qjfqj (ro)

jnlp:

Container ID: docker://cc4875a887ec33e3c4504378e9cfc4a55a0179b433305ac686223cc4165a4307

Image: jenkins/jnlp-slave:alpine

Image ID: docker-pullable://jenkins/jnlp-slave@sha256:8e330d8bc461440c797d543b9872e54b328da3ef3b052180bb5aed33204d4384

Port: <none>

Host Port: <none>

State: Terminated

Reason: Error

Exit Code: 255

Started: Mon, 04 Mar 2019 21:35:13 +0000

Finished: Mon, 04 Mar 2019 21:35:14 +0000

Ready: False

Restart Count: 0

Limits:

cpu: 2

memory: 1Gi

Requests:

cpu: 25m

memory: 256Mi

Environment:

JENKINS_SECRET: 8e1c8742aa520eebf3ccff2bbf4855c270dbfbe08fea032b8e81dfd921ff9afa

JENKINS_TUNNEL: jenkins-service-bad:50000

JENKINS_AGENT_NAME: mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

JENKINS_NAME: mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2

JENKINS_URL: http://jenkins-service:8080/

HOME: /home/jenkins

Mounts:

/home/jenkins from workspace-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-qjfqj (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

workspace-volume:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

default-token-qjfqj:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-qjfqj

Optional: false

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 30s default-scheduler Successfully assigned jenkins-master-test/mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2 to high-memory-node-2

Normal Pulled 28s kubelet, high-memory-node-2 Container image "alpine" already present on machine

Normal Created 28s kubelet, high-memory-node-2 Created container

Normal Started 28s kubelet, high-memory-node-2 Started container

Normal Pulled 28s kubelet, high-memory-node-2 Container image "jenkins/jnlp-slave:alpine" already present on machine

Normal Created 28s kubelet, high-memory-node-2 Created container

Normal Started 28s kubelet, high-memory-node-2 Started container
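Note that the describe output reports the Pod `Status` as Running even though the `jnlp` container is Terminated with exit code 255, so a cleanup that looks only at the Pod phase would likely miss these Pods. The sketch below shows one way to detect them from Pod JSON (as returned by `kubectl get pod -o json`). It is an illustration only, not the plugin's logic. `jnlp_has_failed` and `cleanup_candidates` are hypothetical names, and `SAMPLE_POD` is a hand-trimmed dictionary based on the describe output above.

```python
def jnlp_has_failed(pod, container_name="jnlp"):
    """True if the named container terminated with a non-zero exit code."""
    statuses = pod.get("status", {}).get("containerStatuses", [])
    for cs in statuses:
        if cs.get("name") != container_name:
            continue
        terminated = cs.get("state", {}).get("terminated")
        if terminated is not None and terminated.get("exitCode", 0) != 0:
            return True
    return False


def cleanup_candidates(pods):
    """Names of pods whose agent container has failed, whatever the pod phase."""
    return [p["metadata"]["name"] for p in pods if jnlp_has_failed(p)]


# Trimmed by hand from the describe output; only the fields used above are kept.
SAMPLE_POD = {
    "metadata": {
        "name": "mypod-e549650e-86ea-43b1-946e-95f307b255b9-51lkb-d16h2",
        "labels": {"jenkins": "slave"},
    },
    "status": {
        "phase": "Running",  # the pod still counts as Running
        "containerStatuses": [
            {"name": "alpine", "state": {"running": {}}},
            {"name": "jnlp",
             "state": {"terminated": {"exitCode": 255, "reason": "Error"}}},
        ],
    },
}

if __name__ == "__main__":
    print(cleanup_candidates([SAMPLE_POD]))
```

Checking the container state rather than the Pod phase is the design choice here: the still-running `alpine` container keeps the phase at Running.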

The logs from the JNLP container in one of the Error Pods are:

~ # kubectl logs mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-nx55q jnlp

Warning: JnlpProtocol3 is disabled by default, use JNLP_PROTOCOL_OPTS to alter the behavior

Mar 04, 2019 9:22:33 PM hudson.remoting.jnlp.Main createEngine

INFO: Setting up agent: mypod-cadb7e5a-1337-414d-abd2-a7ca3653a33a-tlt12-nx55q

Mar 04, 2019 9:22:33 PM hudson.remoting.jnlp.Main$CuiListener <init>

INFO: Jenkins agent is running in headless mode.

Mar 04, 2019 9:22:33 PM hudson.remoting.Engine startEngine

INFO: Using Remoting version: 3.27

Mar 04, 2019 9:22:33 PM hudson.remoting.Engine startEngine

WARNING: No Working Directory. Using the legacy JAR Cache location: /home/jenkins/.jenkins/cache/jars

Mar 04, 2019 9:22:34 PM hudson.remoting.jnlp.Main$CuiListener status

INFO: Locating server among [http://jenkins-service:8080/]

Mar 04, 2019 9:22:34 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve

INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]

Mar 04, 2019 9:22:34 PM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve

INFO: Remoting TCP connection tunneling is enabled. Skipping the TCP Agent Listener Port availability check

Mar 04, 2019 9:22:34 PM hudson.remoting.jnlp.Main$CuiListener status

INFO: Agent discovery successful

Agent address: jenkins-service-bad

Agent port: 50000

Identity: 51:84:00:05:77:43:f0:57:4e:08:d9:22:55:61:c6:8a

Mar 04, 2019 9:22:34 PM hudson.remoting.jnlp.Main$CuiListener status

INFO: Handshaking

Mar 04, 2019 9:22:34 PM hudson.remoting.jnlp.Main$CuiListener status

INFO: Connecting to jenkins-service-bad:50000

Mar 04, 2019 9:22:34 PM hudson.remoting.jnlp.Main$CuiListener error

SEVERE: null

java.nio.channels.UnresolvedAddressException

at sun.nio.ch.Net.checkAddress(Net.java:101)

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:622)

at java.nio.channels.SocketChannel.open(SocketChannel.java:189)

at org.jenkinsci.remoting.engine.JnlpAgentEndpoint.open(JnlpAgentEndpoint.java:203)

at hudson.remoting.Engine.connect(Engine.java:691)

at hudson.remoting.Engine.innerRun(Engine.java:552)

at hudson.remoting.Engine.run(Engine.java:474)

Here is another example of a failed JNLP container. This time the JNLP URL was correct, and all of the Error Pods are still around.

# kubectl logs jenkins-slave-g6q4s-5jz7b jnlp

Warning: JnlpProtocol3 is disabled by default, use JNLP_PROTOCOL_OPTS to alter the behavior

Feb 27, 2019 12:52:59 AM hudson.remoting.jnlp.Main createEngine

INFO: Setting up agent: jenkins-slave-g6q4s-5jz7b

Feb 27, 2019 12:52:59 AM hudson.remoting.jnlp.Main$CuiListener <init>

INFO: Jenkins agent is running in headless mode.

Feb 27, 2019 12:52:59 AM hudson.remoting.Engine startEngine

INFO: Using Remoting version: 3.26

Feb 27, 2019 12:52:59 AM hudson.remoting.Engine startEngine

WARNING: No Working Directory. Using the legacy JAR Cache location: /home/jenkins/.jenkins/cache/jars

Feb 27, 2019 12:52:59 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Locating server among [http://jenkins-service:8080/]

Feb 27, 2019 12:53:00 AM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve

INFO: Remoting server accepts the following protocols: [JNLP4-connect, Ping]

Feb 27, 2019 12:53:00 AM org.jenkinsci.remoting.engine.JnlpAgentEndpointResolver resolve

INFO: Remoting TCP connection tunneling is enabled. Skipping the TCP Agent Listener Port availability check

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Agent discovery successful

Agent address: jenkins-service

Agent port: 50000

Identity: 24:e1:66:dc:61:8a:05:cf:65:25:46:d5:59:57:12:be

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Handshaking

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Connecting to jenkins-service:50000

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Trying protocol: JNLP4-connect

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Remote identity confirmed: 24:e1:66:dc:61:8a:05:cf:65:25:46:d5:59:57:12:be

Feb 27, 2019 12:53:00 AM org.jenkinsci.remoting.protocol.impl.ConnectionHeadersFilterLayer onRecv

INFO: [JNLP4-connect connection to jenkins-service/10.233.13.248:50000] Local headers refused by remote: Unknown client name: jenkins-slave-g6q4s-5jz7b

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Protocol JNLP4-connect encountered an unexpected exception

java.util.concurrent.ExecutionException: org.jenkinsci.remoting.protocol.impl.ConnectionRefusalException: Unknown client name: jenkins-slave-g6q4s-5jz7b

at org.jenkinsci.remoting.util.SettableFuture.get(SettableFuture.java:223)

at hudson.remoting.Engine.innerRun(Engine.java:614)

at hudson.remoting.Engine.run(Engine.java:474)

Caused by: org.jenkinsci.remoting.protocol.impl.ConnectionRefusalException: Unknown client name: jenkins-slave-g6q4s-5jz7b

at org.jenkinsci.remoting.protocol.impl.ConnectionHeadersFilterLayer.newAbortCause(ConnectionHeadersFilterLayer.java:378)

at org.jenkinsci.remoting.protocol.impl.ConnectionHeadersFilterLayer.onRecvClosed(ConnectionHeadersFilterLayer.java:433)

at org.jenkinsci.remoting.protocol.ProtocolStack$Ptr.onRecvClosed(ProtocolStack.java:832)

at org.jenkinsci.remoting.protocol.FilterLayer.onRecvClosed(FilterLayer.java:287)

at org.jenkinsci.remoting.protocol.impl.SSLEngineFilterLayer.onRecvClosed(SSLEngineFilterLayer.java:172)

at org.jenkinsci.remoting.protocol.ProtocolStack$Ptr.onRecvClosed(ProtocolStack.java:832)

at org.jenkinsci.remoting.protocol.NetworkLayer.onRecvClosed(NetworkLayer.java:154)

at org.jenkinsci.remoting.protocol.impl.BIONetworkLayer.access$1500(BIONetworkLayer.java:48)

at org.jenkinsci.remoting.protocol.impl.BIONetworkLayer$Reader.run(BIONetworkLayer.java:247)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at hudson.remoting.Engine$1.lambda$newThread$0(Engine.java:93)

at java.lang.Thread.run(Thread.java:748)

Suppressed: java.nio.channels.ClosedChannelException

... 7 more

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Connecting to jenkins-service:50000

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Server reports protocol JNLP4-plaintext not supported, skipping

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Protocol JNLP3-connect is not enabled, skipping

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Server reports protocol JNLP2-connect not supported, skipping

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener status

INFO: Server reports protocol JNLP-connect not supported, skipping

Feb 27, 2019 12:53:00 AM hudson.remoting.jnlp.Main$CuiListener error

SEVERE: The server rejected the connection: None of the protocols were accepted

java.lang.Exception: The server rejected the connection: None of the protocols were accepted

at hudson.remoting.Engine.onConnectionRejected(Engine.java:675)

at hudson.remoting.Engine.innerRun(Engine.java:639)

at hudson.remoting.Engine.run(Engine.java:474)
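The two logs fail for different reasons: the first cannot resolve the tunnel address, while the second reaches the master and is refused as an unknown client. When triaging many Error Pods, it may help to sort their logs by cause. The sketch below does this with plain substring matching. The marker strings are copied from the logs in this report, while the labels and the `classify_jnlp_log` function are made up for illustration.

```python
# (label, marker) pairs; labels are hypothetical, markers come from the logs above.
KNOWN_FAILURES = [
    ("unresolved-tunnel-address", "java.nio.channels.UnresolvedAddressException"),
    ("unknown-client-name", "Unknown client name:"),
    ("no-protocol-accepted", "None of the protocols were accepted"),
]


def classify_jnlp_log(log_text):
    """Return the labels of every known failure marker found in the log text."""
    return [label for label, marker in KNOWN_FAILURES if marker in log_text]


BAD_TUNNEL_EXCERPT = (
    "INFO: Connecting to jenkins-service-bad:50000\n"
    "SEVERE: null\n"
    "java.nio.channels.UnresolvedAddressException\n"
)

REFUSED_EXCERPT = (
    "INFO: [JNLP4-connect connection to jenkins-service/10.233.13.248:50000] "
    "Local headers refused by remote: Unknown client name: jenkins-slave-g6q4s-5jz7b\n"
    "SEVERE: The server rejected the connection: None of the protocols were accepted\n"
)

if __name__ == "__main__":
    print(classify_jnlp_log(BAD_TUNNEL_EXCERPT))
    print(classify_jnlp_log(REFUSED_EXCERPT))
```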

In this case, the Pod was still around in an Error state five days later.

- duplicates: JENKINS-54540 Pods stuck in error state is not cleaned up (Open)