- Type: Bug

- Resolution: Unresolved

- Priority: Minor

- Component/s: git-parameter-plugin, jobconfighistory-plugin

- Environment: Jenkins 2.332.1; Job Configuration History Version 1119.v509e1017356b_; Git Parameter 0.9.15

When a Git Parameter is configured with BRANCH as its name, the Job Configuration History plugin writes uninformative entries whose only change is a UUID. This leads to a cluttered history in jobconfighistory that takes up disk space on the server.

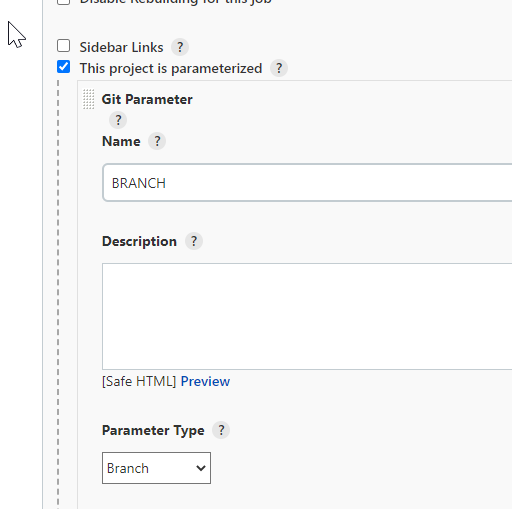

Task settings causing the issue:

Steps to reproduce:

- Create at least 2 branches in your git repo

- Configure any pipeline with a Git Parameter whose Name is BRANCH, and set Branches to build > Branch Specifier (blank for 'any') to one of the 2 branches

- Click Build with Parameters, selecting the branch you specified

- Open the Configure menu and change the branch name in Branches to build to another branch of your repository

- Click Build with Parameters again, selecting the branch you just specified

- Open Job Configuration History

Actual result: 2 new entries appear, and the only change in each is a different UUID

Impact: when the task runs many times a day, the history in jobconfighistory becomes cluttered and uninformative. The extra entries take up disk space on the server and can potentially exhaust its inodes.

What is expected: the Job Config History should not record entries caused solely by a UUID change from the Git parameter plugin. Since the task is executed automatically several times a day, excluding such entries would save disk space and improve readability of the Job Config History.
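To make the expected behaviour concrete, here is a minimal Python sketch of the comparison it implies: two configurations count as equivalent when they differ only in UUID values. This is not code from either plugin. The function names, the sample XML fragments, and the assumption that the changing values are canonical 8-4-4-4-12 hexadecimal UUIDs are all hypothetical and for illustration only.

```python
import re
from typing import List

# Assumption: the values that change between history entries are canonical
# 8-4-4-4-12 hexadecimal UUIDs. Any such value is masked, wherever it appears.
UUID_RE = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)


def normalize(config_xml: str) -> str:
    """Replace every UUID-shaped value with a fixed placeholder."""
    return UUID_RE.sub("UUID", config_xml)


def differs_only_by_uuid(old_xml: str, new_xml: str) -> bool:
    """True if the texts differ but become identical once UUIDs are masked."""
    return old_xml != new_xml and normalize(old_xml) == normalize(new_xml)


def redundant_entries(snapshots: List[str]) -> List[int]:
    """Indices of snapshots that differ from their predecessor only by UUID."""
    return [
        i
        for i in range(1, len(snapshots))
        if differs_only_by_uuid(snapshots[i - 1], snapshots[i])
    ]


# Hypothetical fragments standing in for successive config.xml revisions.
first = "<name>BRANCH</name><uuid>11111111-2222-3333-4444-555555555555</uuid>"
second = "<name>BRANCH</name><uuid>aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee</uuid>"
third = "<name>OTHER</name><uuid>aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee</uuid>"

noise = redundant_entries([first, second, third])
```

Here `second` would be flagged as noise because it differs from `first` only by UUID, while `third` carries a real change (the parameter name) and would be kept.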

A similar issue has already been reported, but for another plugin:

https://issues.jenkins.io/browse/JENKINS-64759

- relates to: JENKINS-64759 list-git-branches-parameter generate a config.xml change on every run (Open)