Type: Bug

Resolution: Fixed

Priority: Blocker

OS version: CentOS 7.8

Jenkins version: 2.249.1

Plugin version: Active Directory 2.17

OpenJDK version: 1.8.0_262

active-directory-2.18

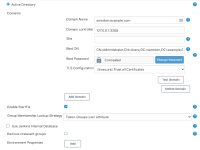

After upgrading the Active Directory plugin from 2.16 to 2.17 on three separate Jenkins servers, each server soon began returning login errors and then became unresponsive through the UI (nginx reported HTTP 502).

The log contained the following:

2020-09-18 13:47:43.269+0000 [id=1418] WARNING h.i.i.InstallUncaughtExceptionHandler#handleException: Caught unhandled exception with ID cb4cc28e-5c87-4536-9e45-a191e1512e0e
java.lang.OutOfMemoryError: unable to create new native thread
    at java.lang.Thread.start0(Native Method)
    at java.lang.Thread.start(Thread.java:717)
    at com.sun.jndi.ldap.Connection.<init>(Connection.java:244)
    at com.sun.jndi.ldap.LdapClient.<init>(LdapClient.java:137)
    at com.sun.jndi.ldap.LdapClient.getInstance(LdapClient.java:1609)
    at com.sun.jndi.ldap.LdapCtx.connect(LdapCtx.java:2749)
    at com.sun.jndi.ldap.LdapCtx.<init>(LdapCtx.java:319)
    at com.sun.jndi.ldap.LdapCtxFactory.getUsingURL(LdapCtxFactory.java:192)
    at com.sun.jndi.ldap.LdapCtxFactory.getLdapCtxInstance(LdapCtxFactory.java:151)
    at hudson.plugins.active_directory.ActiveDirectorySecurityRealm$DescriptorImpl.bind(ActiveDirectorySecurityRealm.java:623)
    at hudson.plugins.active_directory.ActiveDirectorySecurityRealm$DescriptorImpl.bind(ActiveDirectorySecurityRealm.java:554)
    at hudson.plugins.active_directory.ActiveDirectoryUnixAuthenticationProvider$1.call(ActiveDirectoryUnixAuthenticationProvider.java:353)
    at hudson.plugins.active_directory.ActiveDirectoryUnixAuthenticationProvider$1.call(ActiveDirectoryUnixAuthenticationProvider.java:336)
    at com.google.common.cache.LocalCache$LocalManualCache$1.load(LocalCache.java:4767)
    at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3568)
    at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2350)
    at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2313)
    at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2228)
Caused: com.google.common.util.concurrent.ExecutionError
    at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2232)
    at com.google.common.cache.LocalCache.get(LocalCache.java:3965)
    at com.google.common.cache.LocalCache$LocalManualCache.get(LocalCache.java:4764)
    at hudson.plugins.active_directory.ActiveDirectoryUnixAuthenticationProvider.retrieveUser(ActiveDirectoryUnixAuthenticationProvider.java:336)
    at hudson.plugins.active_directory.ActiveDirectoryUnixAuthenticationProvider.retrieveUser(ActiveDirectoryUnixAuthenticationProvider.java:299)
    at hudson.plugins.active_directory.ActiveDirectoryUnixAuthenticationProvider.retrieveUser(ActiveDirectoryUnixAuthenticationProvider.java:225)
    at hudson.plugins.active_directory.ActiveDirectorySecurityRealm.authenticate(ActiveDirectorySecurityRealm.java:831)
    at hudson.security.AbstractPasswordBasedSecurityRealm.doAuthenticate(AbstractPasswordBasedSecurityRealm.java:72)
    at hudson.security.AbstractPasswordBasedSecurityRealm.access$000(AbstractPasswordBasedSecurityRealm.java:31)
    at hudson.security.AbstractPasswordBasedSecurityRealm$Authenticator.retrieveUser(AbstractPasswordBasedSecurityRealm.java:106)
    at org.acegisecurity.providers.dao.AbstractUserDetailsAuthenticationProvider.authenticate(AbstractUserDetailsAuthenticationProvider.java:122)
    at org.acegisecurity.providers.ProviderManager.doAuthentication(ProviderManager.java:200)
    at org.acegisecurity.AbstractAuthenticationManager.authenticate(AbstractAuthenticationManager.java:47)
    at org.acegisecurity.ui.webapp.AuthenticationProcessingFilter.attemptAuthentication(AuthenticationProcessingFilter.java:74)
    at org.acegisecurity.ui.AbstractProcessingFilter.doFilter(AbstractProcessingFilter.java:252)
    at hudson.security.ChainedServletFilter$1.doFilter(ChainedServletFilter.java:87)
    at jenkins.security.BasicHeaderProcessor.doFilter(BasicHeaderProcessor.java:93)
    at hudson.security.ChainedServletFilter$1.doFilter(ChainedServletFilter.java:87)
    at org.acegisecurity.context.HttpSessionContextIntegrationFilter.doFilter(HttpSessionContextIntegrationFilter.java:249)
    at hudson.security.HttpSessionContextIntegrationFilter2.doFilter(HttpSessionContextIntegrationFilter2.java:67)
    at hudson.security.ChainedServletFilter$1.doFilter(ChainedServletFilter.java:87)
    at hudson.security.ChainedServletFilter.doFilter(ChainedServletFilter.java:90)
    at hudson.security.HudsonFilter.doFilter(HudsonFilter.java:171)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1618)
    at org.kohsuke.stapler.compression.CompressionFilter.doFilter(CompressionFilter.java:51)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1618)
    at hudson.util.CharacterEncodingFilter.doFilter(CharacterEncodingFilter.java:82)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1618)
    at org.kohsuke.stapler.DiagnosticThreadNameFilter.doFilter(DiagnosticThreadNameFilter.java:30)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1618)
    at jenkins.security.SuspiciousRequestFilter.doFilter(SuspiciousRequestFilter.java:36)
    at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1618)
    at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:549)
    at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:143)
    at org.eclipse.jetty.security.SecurityHandler.handle(SecurityHandler.java:578)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
    at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:235)
    at org.eclipse.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1610)
    at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)
    at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1369)
    at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:188)
    at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:489)
    at org.eclipse.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1580)
    at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:186)
    at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1284)
    at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
    at org.eclipse.jetty.server.Server.handle(Server.java:501)
    at org.eclipse.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:383)
    at org.eclipse.jetty.server.HttpChannel.dispatch(HttpChannel.java:556)
    at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:375)
    at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:272)
    at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)
    at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:103)
    at org.eclipse.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:336)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:313)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:171)
    at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:129)
    at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:375)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:806)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:938)
    at java.lang.Thread.run(Thread.java:748)

Because the stack trace points to the Active Directory plugin, I rolled the plugin back from 2.17 to 2.16. After the rollback, all three Jenkins servers worked normally again.
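`OutOfMemoryError: unable to create new native thread` means the JVM could not start another OS thread, so the thing to check is thread count rather than heap size. The sketch below shows one way someone could confirm where the threads are going, assuming the leaked threads are the per-connection reader threads that `com.sun.jndi.ldap.Connection` starts (the frame at the top of the trace). The class name `LdapThreadCount` and the helper `countThreadsInClass` are made up for illustration. Only `Thread.getAllStackTraces()` and `StackTraceElement` are standard JDK APIs. The code only tells you something when it runs inside the affected JVM; as a standalone program it sees just its own threads.

```java
import java.util.Map;

public class LdapThreadCount {

    /**
     * Counts threads in the given dump whose stack contains at least one
     * frame from the named class. Hypothetical helper, not part of Jenkins
     * or the Active Directory plugin.
     */
    static long countThreadsInClass(Map<Thread, StackTraceElement[]> dump, String className) {
        long count = 0;
        for (StackTraceElement[] frames : dump.values()) {
            for (StackTraceElement frame : frames) {
                if (frame.getClassName().equals(className)) {
                    count++;
                    break; // count each thread at most once
                }
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // Snapshot of every live thread in this JVM and its current stack.
        Map<Thread, StackTraceElement[]> dump = Thread.getAllStackTraces();
        System.out.println("total live threads: " + dump.size());
        // Assumption: leaked LDAP connections show up as threads running Connection.run.
        System.out.println("threads in com.sun.jndi.ldap.Connection: "
                + countThreadsInClass(dump, "com.sun.jndi.ldap.Connection"));
    }
}
```

If the second number keeps climbing between samples while logins continue, that would be consistent with LDAP connections being opened and never closed. A thread dump taken with `jstack` on the Jenkins process gives the same information without running any code.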

This is what JavaMelody Monitoring shows for Threads Count:
