- Type: Bug
- Resolution: Fixed
- Priority: Critical
- Component/s: workflow-cps-plugin

Environment: Jenkins 2.7.4 (1.6 is affected too)

Pipeline: 2.3 (tested on a fresh install with the latest dependencies)

Environment setup:

1. Run "docker run -p 8080:8080 -p 50000:50000 jenkins".
2. Browse to http://localhost:8080.
3. Complete the initial Jenkins setup and install the 'pipeline' plugin.

I'm seeing odd behaviour from the parallel step that looks related to variable scoping. The following minimal pipeline script reproduces the problem:

```groovy
def fn = { val -> println val }

parallel([
    a: { fn('a') },
    b: { fn('b') }
])
```

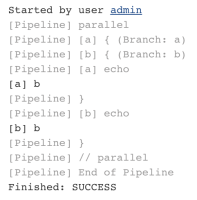

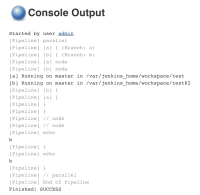

Expected output:

a
b

(or b then a, since the execution order of parallel branches is undefined)

Actual output:

b
b

- duplicates:
  - JENKINS-26481 Mishandling of binary methods accepting Closure (Resolved)
- is duplicated by:
  - JENKINS-36964 Parallel Docker pipeline (Resolved)
- is related to:
  - JENKINS-55040 Problem sharing data objects between parallel build stages (Resolved)
- relates to:
  - JENKINS-38052 Pipeline parallel map not supporting curried closures (Resolved)
  - JENKINS-44746 Groovy bug when creating Closure in loop (Resolved)
