- Type: Bug
- Resolution: Duplicate
- Priority: Critical
- Component/s: google-compute-engine-plugin
- None

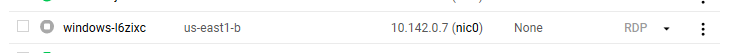

Occasionally this plugin leaves orphaned VMs after they are terminated / no longer used by this plugin, left in a stopped state:

This wastes compute resources and incurs unnecessary costs.

Ideally, the plugin would not leave these VMs behind in the first place. In addition, it would help to have a periodic check (e.g. every 5 minutes) that goes through the current VMs in the project, finds the ones tagged with "jenkins", and automatically terminates any of them that are not known to Jenkins. This would make the plugin resilient against unexpected Jenkins restarts and similar events. The check should be optional, though, in case multiple Jenkins instances share the same GCE project.
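A minimal sketch of the proposed periodic cleanup, written as a reconciliation loop. It is not the plugin's code: `Instance`, `find_orphans`, and `cleanup_loop` are hypothetical names, and the three callables stand in for whatever actually lists project VMs, lists the agents Jenkins knows about, and deletes a VM. It assumes each VM exposes a name and a set of tags, and that the "jenkins" tag marks plugin-created VMs.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional, Set


@dataclass
class Instance:
    """Hypothetical, simplified view of a GCE VM (not a client-library type)."""
    name: str
    tags: Set[str] = field(default_factory=set)


def find_orphans(instances: Iterable[Instance], known_agents: Set[str],
                 tag: str = "jenkins") -> List[str]:
    """Return names of VMs carrying `tag` that Jenkins does not know about."""
    return sorted(
        vm.name for vm in instances
        if tag in vm.tags and vm.name not in known_agents
    )


def cleanup_loop(list_instances: Callable[[], Iterable[Instance]],
                 list_known_agents: Callable[[], Set[str]],
                 delete_instance: Callable[[str], None],
                 enabled: bool = True,
                 interval_seconds: float = 300.0,  # "every 5 minutes"
                 iterations: Optional[int] = None) -> None:
    """Periodically delete tagged VMs unknown to Jenkins.

    `iterations=None` runs forever; a finite value is handy for testing.
    """
    if not enabled:
        # Opt-out for GCE projects shared by several Jenkins instances.
        return
    done = 0
    while iterations is None or done < iterations:
        # VMs are listed before agents (arguments evaluate left to right).
        for name in find_orphans(list_instances(), list_known_agents()):
            delete_instance(name)
        done += 1
        if iterations is None or done < iterations:
            time.sleep(interval_seconds)
```

For example, with VMs `jenkins-agent-1` and `jenkins-agent-2` (both tagged "jenkins") and `db-server` (tagged "prod"), and only `jenkins-agent-1` registered in Jenkins, one pass deletes just `jenkins-agent-2`; `db-server` is untouched because it lacks the tag. A real implementation would likely also need a grace period, so that a VM that is still being provisioned, and is not yet registered as an agent, is not deleted.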