- Type: Improvement
- Resolution: Fixed
- Priority: Minor
- Component/s: pipeline-model-definition-plugin
- Environment: Fresh Jenkins LTS 2.150.3 install with the 'recommended' plugins, including [Pipeline: Declarative Plugin|https://plugins.jenkins.io/pipeline-model-definition] version 1.3.6
- pipeline-model-definition 1.3.7

Symptom

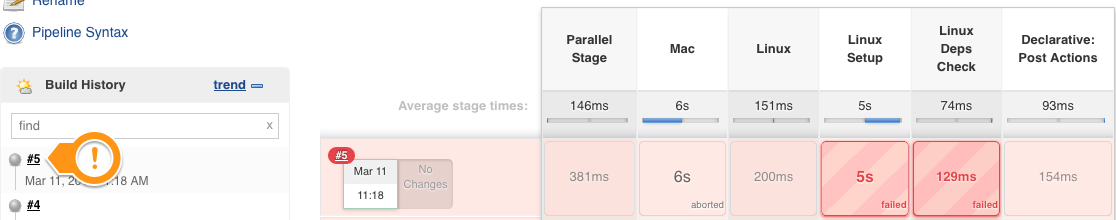

In a Pipeline that uses parallel stages with failFast true enabled, when a stage with an agent fails, the final build result is ABORTED instead of FAILURE.

A similar bug was recently fixed in JENKINS-55459, which corrected the build result when using non-nested parallel stages with failFast true enabled. However, that fix does not seem to cover the case where a nested stage inside one of the parallel stages fails.
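For illustration, a minimal Declarative Pipeline with the shape described above (a failFast parallel block where one parallel stage has its own agent and a nested sequential stage that fails) might look like the following sketch. This is NOT the Jenkinsfile attached to this issue; the stage names, the use of agent any, and the sh 'exit 1' and sh 'sleep 60' steps are assumptions chosen only to mimic the "script returned exit code 1" failure seen in the log.

```groovy
// Hypothetical reproduction sketch; NOT the Jenkinsfile attached to this issue.
// Stage names, agents, and shell steps are illustrative assumptions.
pipeline {
    agent none
    stages {
        stage('Parallel') {
            // Abort the remaining parallel branches as soon as one branch fails
            failFast true
            parallel {
                stage('Branch A') {
                    // Parallel stage with its own agent and nested sequential stages
                    agent any
                    stages {
                        stage('Nested failing stage') {
                            steps {
                                // Non-zero exit produces "ERROR: script returned exit code 1"
                                sh 'exit 1'
                            }
                        }
                    }
                }
                stage('Branch B') {
                    // Long-running sibling branch that failFast should abort
                    agent any
                    steps {
                        sh 'sleep 60'
                    }
                }
            }
        }
    }
}
```

Under the behavior reported here, a run of a pipeline shaped like this on version 1.3.6 would be expected to end as ABORTED, whereas the expected result is FAILURE.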

Evidence

The fix for JENKINS-55459 was delivered in version 1.3.5 of the Pipeline: Declarative Plugin; I am testing version 1.3.6.

I started a brand-new Jenkins LTS 2.150.3 instance with the 'recommended' plugins, including Pipeline: Declarative Plugin version 1.3.6.

I ran the test case from JENKINS-55459, and the build is correctly marked as FAILURE, so the fix from JENKINS-55459 works for that case.

When I run the attached Jenkinsfile, the build still shows as ABORTED:

...
ERROR: script returned exit code 1
Finished: ABORTED

The full log is attached (log).

I expected the build status to be marked as FAILURE, not ABORTED.

Hypothesis

I believe the fix from JENKINS-55459 works, but it does not account for failures inside stages that have their own agents within the parallel stages.

- relates to: JENKINS-55459 failFast option for parallel stages should be able to set build result to FAILED (Closed)
