- Type: Bug
- Resolution: Not A Defect
- Priority: Major
- Component/s: kubernetes-plugin

We recently upgraded Jenkins from 2.319.1 to 2.361.1 and the Kubernetes plugin from 1.31.1 to 3704.va_08f0206b_95e.

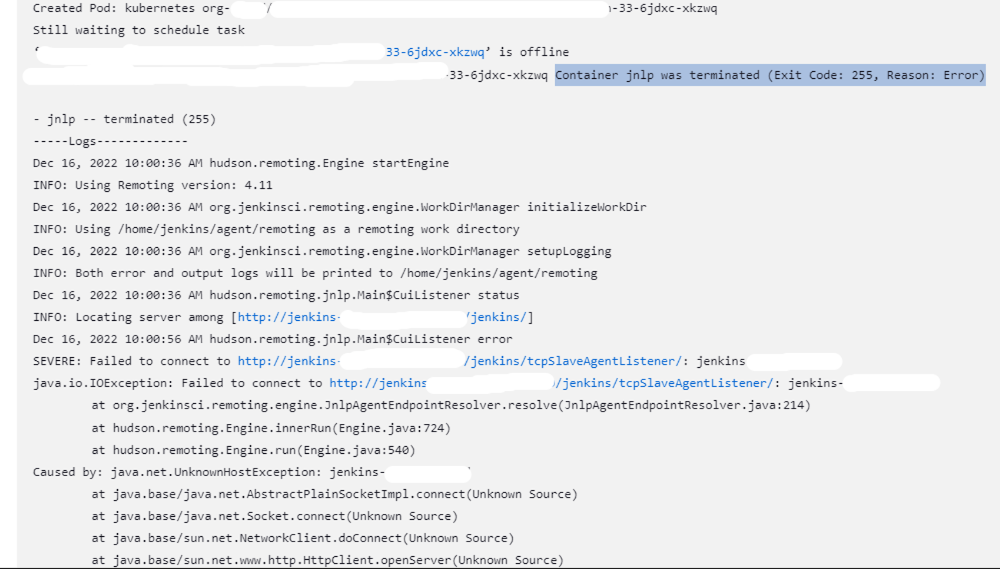

Since the upgrade, we have been getting the following error when establishing the connection with the JNLP container:

The error occurs intermittently. We have not been able to identify a pattern or root cause, but it is affecting several production environments.