-

Type:

Bug

-

Resolution: Cannot Reproduce

-

Priority:

Major

-

Component/s: workflow-cps-plugin

-

Environment:Jenkins ver. 2.79

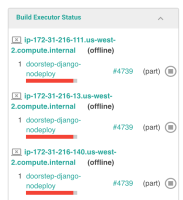

I have a jenkins pipeline that uses the parallel command to execute tests and then at the end I run an "sh" command. Upon examining these pipeline runs which seem "stuck" i find this error message at the end of the console:

java.lang.IllegalStateException: cannot start writing logs to a finished node org.jenkinsci.plugins.workflow.cps.nodes.StepEndNode[id=922] at org.jenkinsci.plugins.workflow.support.actions.LogActionImpl.<init>(LogActionImpl.java:114) at org.jenkinsci.plugins.workflow.support.actions.LogActionImpl.stream(LogActionImpl.java:85) at org.jenkinsci.plugins.workflow.support.DefaultStepContext.get(DefaultStepContext.java:73) at org.jenkinsci.plugins.workflow.cps.steps.ParallelStep$ResultHandler$Callback.onFailure(ParallelStep.java:122) at org.jenkinsci.plugins.workflow.cps.CpsBodyExecution$FailureAdapter.receive(CpsBodyExecution.java:345) at com.cloudbees.groovy.cps.impl.ThrowBlock$1.receive(ThrowBlock.java:68) at com.cloudbees.groovy.cps.impl.ConstantBlock.eval(ConstantBlock.java:21) at com.cloudbees.groovy.cps.Next.step(Next.java:83) at com.cloudbees.groovy.cps.Continuable$1.call(Continuable.java:174) at com.cloudbees.groovy.cps.Continuable$1.call(Continuable.java:163) at org.codehaus.groovy.runtime.GroovyCategorySupport$ThreadCategoryInfo.use(GroovyCategorySupport.java:122) at org.codehaus.groovy.runtime.GroovyCategorySupport.use(GroovyCategorySupport.java:261) at com.cloudbees.groovy.cps.Continuable.run0(Continuable.java:163) at org.jenkinsci.plugins.workflow.cps.SandboxContinuable.access$001(SandboxContinuable.java:19) at org.jenkinsci.plugins.workflow.cps.SandboxContinuable$1.call(SandboxContinuable.java:35) at org.jenkinsci.plugins.workflow.cps.SandboxContinuable$1.call(SandboxContinuable.java:32) at org.jenkinsci.plugins.scriptsecurity.sandbox.groovy.GroovySandbox.runInSandbox(GroovySandbox.java:108) at org.jenkinsci.plugins.workflow.cps.SandboxContinuable.run0(SandboxContinuable.java:32) at org.jenkinsci.plugins.workflow.cps.CpsThread.runNextChunk(CpsThread.java:174) at org.jenkinsci.plugins.workflow.cps.CpsThreadGroup.run(CpsThreadGroup.java:330) at org.jenkinsci.plugins.workflow.cps.CpsThreadGroup.access$100(CpsThreadGroup.java:82) at org.jenkinsci.plugins.workflow.cps.CpsThreadGroup$2.call(CpsThreadGroup.java:242) at org.jenkinsci.plugins.workflow.cps.CpsThreadGroup$2.call(CpsThreadGroup.java:230) at org.jenkinsci.plugins.workflow.cps.CpsVmExecutorService$2.call(CpsVmExecutorService.java:64) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at hudson.remoting.SingleLaneExecutorService$1.run(SingleLaneExecutorService.java:112) at jenkins.util.ContextResettingExecutorService$1.run(ContextResettingExecutorService.java:28) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) Finished: ABORTED

My guess as to what is going on is that at our company, we scale jenkins up and down during the day. There's a script that will removea jenkins slave from the master via a script that roughly looks like this:

|#! /bin/bash -ex |cd /var/jenkins_home/war/WEB-INF |java -jar jenkins-cli.jar -auth ${username}:${password} -s http://localhost:8080/ delete-node ${nodeName}

We detach nodes in this way for a variety of reasons but most commonly, it's because a slave node in our Ec2 spot instance fleet request went away and want to detach it from the master.

Big picture, we are probably doing something wrong. However, it seems like this ticket depicts a Jenkins pipeline in a stuck state.

Please advise. I am seeing this exact issue almost daily now. There must be some smart way to recover from this. Ideally, if a slave goes away, Jenkins master is smart enough to somehow re-dispatch the work to be done on some other slave which is still up.