- Type: Bug

- Resolution: Duplicate

- Priority: Minor

- Component/s: workflow-durable-task-step-plugin

- Environment: Jenkins 2.29

I had a pipeline build running and then restarted Jenkins. After Jenkins came back up, the log for one of the parallel steps in the build showed:

Resuming build at Mon Nov 07 13:11:05 CET 2016 after Jenkins restart

Waiting to resume part of Atlassian Bitbucket » honey » master #4: ???

Waiting to resume part of Atlassian Bitbucket » honey » master #4: Waiting for next available executor on bcubuntu32

The last message repeated every few minutes. The slave bcubuntu32 has only one executor, and it seems this executor was "used up" by the very task that was waiting for an available executor.

After I went into the configuration and changed number of executors to 2, the build continued as normal.
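To check whether the single executor really is held by the resuming build, something like the following could be run in the Jenkins script console. This is only a diagnostic sketch: the node name `bcubuntu32` is taken from the log above, and the snippet assumes that node exists and that the script console is available to you. It uses only the core `Computer`, `Executor`, and `Queue` accessors and changes nothing.

```groovy
import jenkins.model.Jenkins

// Node name from the report above; replace with the affected agent.
def nodeName = 'bcubuntu32'

def computer = Jenkins.instance.getComputer(nodeName)
if (computer == null) {
    println "No computer found for node '${nodeName}'"
} else {
    // List each executor and what, if anything, currently occupies it.
    computer.executors.each { executor ->
        println "Executor #${executor.number}: busy=${executor.busy}, running=${executor.currentExecutable}"
    }
}

// List queued items together with the reason Jenkins gives for not starting them.
Jenkins.instance.queue.items.each { item ->
    println "Queued: ${item.task.name} -> ${item.why}"
}
```

If the only executor is reported as busy with part of the same build that also appears in the queue waiting for that node, that matches the behaviour described here.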

A possibly related issue: before the restart, I put Jenkins in quiet mode, but the same build agent hung at the end of the pipeline part that was running and never finished the build. In the end I restarted without waiting for that part to finish.

How to reproduce

- In a fresh Jenkins instance, set the number of executors on master to 1

- Create job-1 and job-2 as follows:

job-1:

    node {
        parallel "parallel-1": { sh "true" }, "parallel-2": { sh "true" }
    }
    build 'job-2'

job-2:

    node { sh "sleep 300" }

- Start a build of job-1, wait for the node block of job-2 to start, then restart Jenkins.

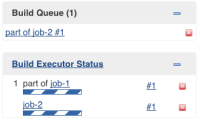

When Jenkins comes back online, you'll see a deadlock.

It seems job-1 is trying to come back on the node it used before the restart, even though its current state doesn't require any node.

- duplicates

  - JENKINS-53709 Parallel blocks in node blocks cause executors to be persisted outside of the node block (Resolved)

- relates to

  - JENKINS-41791 Build cannot be resumed if parallel was used with Kubernetes plugin (Resolved)

  - JENKINS-43587 Pipeline fails to resume after master restart/plugin upgrade (Resolved)

- links to